Modernization of Card Services Platform for GlobalBank Financial Services

Name and Sector of Client:

- Client Name: A Leading Company in the Digital Payments Landscape

- Sector: Financial Services and Banking

Services Include:

- Electronic payment services

- Issuing of cards, including biometric cards, such as debit cards, credit cards, prepaid cards, and gift cards

Primary Work:

- Enabling cardholders to pay at all points of sale and withdraw cash from ATMs

- Risk management and credit assessment

- Digital payment systems

- Regulatory compliance and reporting

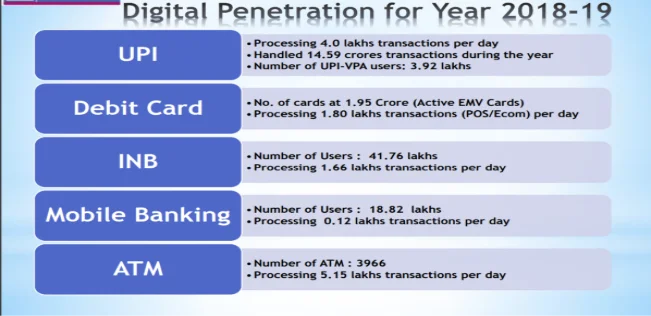

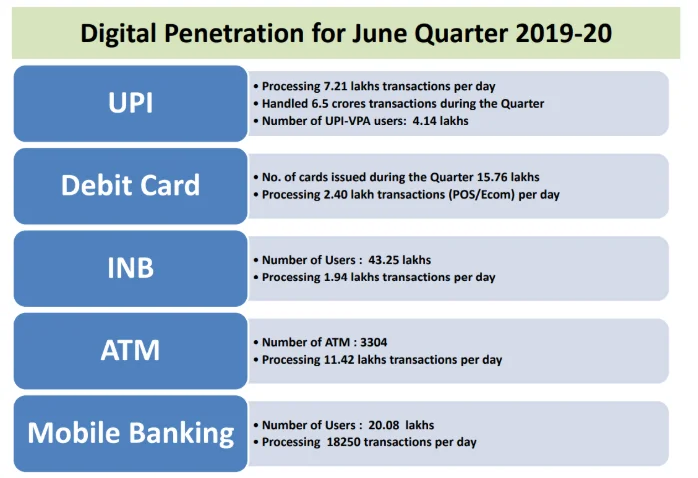

Digital penetration of transactions, based on previous years' data:

Year 2018-2019:

Year 2019-2020:

Background:

GlobalBank, a distinguished financial institution with a global footprint serving over 5 million customers worldwide, is spearheading an ambitious digital transformation initiative. At the heart of this transformation is the modernization of its card services platform, addressing the evolving needs of its diverse customer base across multiple regions. This strategic shift comes as GlobalBank experiences a remarkable 25% year-over-year growth in its customer base, necessitating a more robust and scalable infrastructure.

Overview of Challenges in the Company's Existing Architecture:

The initial tightly coupled architecture of the payment integration platform faced bottlenecks, high operational costs, latency, and security vulnerabilities due to direct service-to-service communication and the absence of a dedicated message broker. To resolve these challenges, we transitioned to a microservices-based architecture powered by RabbitMQ, ensuring asynchronous communication and scalability.

Problems Identified That Need a Solution:

- Scalability Issues:

- Lack of Message Broker: Without a message broker, the application struggles with handling high volumes of transactions efficiently. This is because there is no effective way to decouple services, leading to potential bottlenecks as the load increases.

- Direct Service-to-Service Communication: Each component communicates directly with the others, which leads to a tightly coupled architecture. This makes it difficult to scale individual components independently based on demand.


- Cost Management:

- Large Instances Requirement: Because the services communicate directly with each other, the application requires larger instances to handle increased load. This approach is not cost-effective, as it raises operational costs for both hardware and maintenance.

- Inefficiency in Resource Utilization: Without the ability to scale components independently, there is often either an over-provisioning or under-utilization of resources, leading to increased costs without proportional benefits.


- Performance Degradation:

- Increased Latency: The direct communication between services can lead to increased latency as the system scales. This is particularly problematic for a payment application where transaction speed is critical.

- Risk of Data Loss: In high-load scenarios, the lack of a message broker can lead to data being lost or not processed in a timely manner, as there is no buffer to handle sudden spikes in traffic or system failures.


- Operational Complexity:

- Difficulty in Maintenance and Upgrades: With a tightly coupled system, updating or maintaining individual components can become complex and risky. This can lead to longer downtime during upgrades or maintenance, affecting the overall service availability.

- Challenges in Monitoring and Troubleshooting: With many direct point-to-point connections between services, it becomes more difficult to monitor the system effectively and to identify the root causes of issues quickly.


- Security Vulnerabilities:

- Increased Exposure to Attacks: Direct service-to-service communications without a secure message broker can expose more endpoints, potentially increasing the attack surface for malicious actors.

- Difficulty in Implementing Consistent Security Policies: Enforcing security policies consistently across all components can be challenging without a centralized control mechanism like a message broker.


- Compliance and Regulatory Challenges:

- Data Governance Issues: Ensuring compliance with data protection and security standards (such as PCI DSS, SOC 1, and SOC 2) is more difficult when data flows are not managed centrally.

- Audit Complexity: Without centrally managed data flows, auditing the system for compliance with regulatory requirements becomes more complex and resource-intensive.


The payment integration platform was redesigned using a microservices architecture with RabbitMQ as a message broker, ensuring loose coupling and eliminating direct service-to-service communication. Each module processes messages asynchronously, enhancing efficiency.
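To illustrate the decoupling idea in a self-contained way, the following Python sketch uses the standard library's `queue.Queue` as a stand-in for the broker. A producer publishes a burst of messages without waiting for the consumer, and the consumer drains them at its own pace. This is an in-process analogy only, not RabbitMQ or Amazon MQ code. The message shape and the `process_payment` function are hypothetical.

```python
import queue
import threading

# In-process stand-in for a broker queue (hypothetical; this is not RabbitMQ).
broker = queue.Queue(maxsize=10_000)
SENTINEL = None  # tells the consumer that no more messages will arrive


def process_payment(message: dict) -> str:
    """Hypothetical downstream handler; a real module would authorize the transaction."""
    return f"processed {message['txn_id']}"


def producer(count: int) -> None:
    # The producer only enqueues; it never calls the consumer directly.
    for i in range(count):
        broker.put({"txn_id": i, "amount_cents": 1000})
    broker.put(SENTINEL)


def consumer(results: list) -> None:
    # The consumer pulls at its own pace, so a burst is buffered instead of dropped.
    while True:
        message = broker.get()
        if message is SENTINEL:
            break
        results.append(process_payment(message))


def run(count: int) -> list:
    results: list = []
    consumer_thread = threading.Thread(target=consumer, args=(results,))
    consumer_thread.start()
    producer(count)  # burst published from the main thread
    consumer_thread.join()
    return results


if __name__ == "__main__":
    print(len(run(5_000)))
```

Because `put` blocks when the bounded queue is full, the producer is slowed down (back-pressure) rather than losing messages. A real broker such as RabbitMQ adds durable queues and consumer acknowledgements on top of this basic pattern.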

This event-driven system handles 100,000+ daily card transactions (credit, debit, and specialized banking cards) across 22 microservices while achieving 1,000 TPS. Integrated with Mastercard and Visa, it enables real-time authorization and processing. RabbitMQ facilitates 50,000 messages per minute with an average 200ms response time. The platform supports multiple banking partners and 15 card products, maintaining strict compliance with PCI-DSS, SOC 1 & 2 Type II, and ensuring secure transaction log retention.
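The throughput figures above can be cross-checked with simple arithmetic. This Python sketch converts the stated daily and per-minute volumes into per-second rates. It then applies Little's law (items in the system = arrival rate × time in system) to estimate how many requests are in flight at 1,000 TPS with a 200 ms average response time. It assumes steady-state traffic, so real peaks will differ.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def per_second(count: float, window_seconds: float) -> float:
    """Convert a count observed over a time window into an average per-second rate."""
    return count / window_seconds


def littles_law_in_flight(rate_per_second: float, latency_seconds: float) -> float:
    """Little's law: average items in the system L = arrival rate (lambda) * time in system (W)."""
    return rate_per_second * latency_seconds


daily_avg_tps = per_second(100_000, SECONDS_PER_DAY)      # 100,000 transactions spread over a day
broker_msgs_per_second = per_second(50_000, 60)           # 50,000 messages per minute
in_flight_at_peak = littles_law_in_flight(1_000, 0.200)   # 1,000 TPS at 200 ms each

print(f"average TPS over a day: {daily_avg_tps:.2f}")
print(f"broker messages per second: {broker_msgs_per_second:.0f}")
print(f"requests in flight at 1,000 TPS: {in_flight_at_peak:.0f}")
```

The daily average works out to about 1.16 TPS, far below 1,000 TPS. This gap suggests the 1,000 TPS figure describes peak capacity rather than average load, which is why the platform is sized for bursts.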

To solve the problems above, we proposed the following solution architecture:

To address the scalability issues, cost management challenges, performance degradation, operational complexity, security vulnerabilities, and compliance and regulatory challenges in the application, we leveraged AWS services as a comprehensive solution. Here is how each service helps solve these problems:

- Scalability Issues:

- Amazon MQ: To address the critical need for a dedicated message broker, we proposed a RabbitMQ cluster on Amazon MQ. Amazon MQ's cluster deployment is reached through VPC endpoints, which keeps broker communication private. It also supports AMQPS (AMQP over TLS), which meets the application's requirements for transmitting messages between its modules. As a managed, highly available message broker, Amazon MQ handles high transaction volumes securely and reliably. It decouples the services, which spreads load more evenly, avoids bottlenecks, and lets individual components scale independently.

- Amazon EKS (Elastic Kubernetes Service): EKS deploys and manages containerized applications on Kubernetes, which suits a microservices architecture because each service can be scaled independently. On EKS, the Horizontal Pod Autoscaler (HPA) scales pods and Karpenter provisions nodes, together enabling on-demand scaling. EKS also runs the payment integration platform's database upgrade tools as init containers before the application containers start. Secret management and load balancing are handled natively through Kubernetes Secrets, Deployments, Services, and Ingress resources.

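The HPA mentioned above chooses a replica count with a documented formula: desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). It skips the change when that ratio is within a tolerance of 1.0 (0.1 by default). The Python sketch below reproduces that calculation for intuition only. The min/max bounds and utilization numbers are illustrative, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def hpa_desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Approximate the Kubernetes HPA scaling rule for a single metric.

    Simplified sketch: ignores readiness, missing metrics and stabilization windows.
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the minReplicas/maxReplicas configured on the HPA object.
    return max(min_replicas, min(max_replicas, desired))


# 4 pods averaging 90% CPU against a 60% target -> scale out to 6 pods.
print(hpa_desired_replicas(4, 90, 60))
```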

- Cost Management:

- Amazon EC2 (Elastic Compute Cloud): Running the EKS worker nodes on Amazon EC2 through EKS managed node groups provides the flexibility of containers while keeping control over the underlying servers. This allows precise resource allocation, which is essential for optimizing operational costs. Managed node groups automate the provisioning and lifecycle management of the EC2 nodes, reducing the administrative burden and the cost of manual cluster management. The result is more efficient resource utilization and less over-provisioning, leading to more cost-effective operations.

- Amazon RDS (Relational Database Service): RDS makes it easy to set up, operate, and scale a relational database in the cloud. It provides cost-efficient and resizable capacity while automating time-consuming administration tasks such as hardware provisioning, database setup, patching, and backups.


- Performance Degradation:

- AWS Network Load Balancer (NLB): NLB operates at the transport layer (layer 4) and is designed to handle millions of requests per second at ultra-low latency, which makes it well suited to volatile workloads. It distributes traffic across multiple downstream targets to improve application performance.

- AWS Application Load Balancer (ALB): ALB is best suited for load balancing of HTTP and HTTPS traffic and provides advanced request routing targeted at the delivery of modern application architectures, including microservices and containers. ALB can conditionally route requests based on the content of the request, which enhances the responsiveness and scalability of applications.

- Kubernetes Ingress Resource: Ingress in Kubernetes is an API object that manages external access to the services in a cluster, typically HTTP. Ingress can provide load balancing, SSL termination, and name-based virtual hosting. It is a powerful tool for routing traffic and managing the services within a Kubernetes cluster, which helps in reducing latency and increasing the processing speed of requests.

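The path-based routing described for Ingress can be pictured with a small model of Ingress `pathType: Prefix` matching. Under that path type, paths are compared element by element after splitting on "/", and the longest matching path wins. The Python sketch below is illustrative only. The paths and backend Service names are hypothetical, and in the cluster this matching is done by the ingress controller, not by application code.

```python
from typing import Dict, List, Optional

# Hypothetical routing table: path prefix -> backend Service name.
ROUTES: Dict[str, str] = {
    "/": "acquiring-ui",
    "/issuing": "issuing-ui",
    "/issuing/reports": "authentication-reports",
}


def _elements(path: str) -> List[str]:
    """Split a URL path into its non-empty '/'-separated elements."""
    return [part for part in path.split("/") if part]


def match_prefix(rule_path: str, request_path: str) -> bool:
    """Element-wise prefix match: '/issuing' matches '/issuing/x' but not '/issuingx'."""
    rule = _elements(rule_path)
    return _elements(request_path)[: len(rule)] == rule


def route(request_path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the backend for the longest matching prefix, or None if nothing matches."""
    candidates = [p for p in routes if match_prefix(p, request_path)]
    if not candidates:
        return None
    best = max(candidates, key=lambda p: len(_elements(p)))
    return routes[best]


print(route("/issuing/reports/daily"))  # authentication-reports
```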

- Operational Complexity:

Terraform, CloudWatch, and Dynatrace help address the operational complexity described above:

- Terraform automates infrastructure provisioning, eliminating manual errors and inconsistencies. It keeps all environments (dev, test, prod) identical, minimizing discrepancies. Keeping the Terraform code under version control also makes infrastructure rollbacks and audits easier.

- CloudWatch for monitoring and alerting with SNS: CloudWatch monitors Amazon RDS metrics such as CPU utilization and memory, along with other performance metrics. It also monitors the dedicated message broker running on Amazon MQ. CloudWatch alarms publish to SNS topics, which send email and Slack notifications so the DevOps team can investigate infrastructure bottlenecks in Amazon RDS or Amazon MQ.

- Dynatrace for the Kubernetes setup: Dynatrace is a monitoring solution for Kubernetes environments that is particularly valuable for fintech applications such as debit and credit card issuing. It provides deep visibility into application performance, enabling developers to pinpoint and resolve issues quickly. It automatically captures and analyses metrics, traces, and logs across the entire application stack. This lets Dynatrace identify performance bottlenecks, such as slow database queries or network latency, that affect transaction processing. With this real-time insight, teams can address issues before they significantly affect customer experience or cause financial losses. Dynatrace also centralizes log data and correlates it with performance metrics, making it easier to find the root cause of errors and speed up troubleshooting. Integrated with existing alerting systems, it proactively notifies teams of critical issues, minimizing downtime and supporting business continuity.

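CloudWatch alarms like those described above are commonly configured as "M out of N" alarms (Datapoints to Alarm out of Evaluation Periods), so a single spike does not page the team. This Python sketch reproduces that decision for a greater-than-threshold alarm. The CPU samples and thresholds are hypothetical. In practice CloudWatch itself evaluates the alarm and publishes the state change to the SNS topic.

```python
from typing import Sequence


def alarm_state(
    datapoints: Sequence[float],
    threshold: float,
    evaluation_periods: int,
    datapoints_to_alarm: int,
) -> str:
    """Evaluate an 'M out of N' alarm with a GreaterThanThreshold comparison.

    Simplified sketch of CloudWatch alarm evaluation: it looks only at the most
    recent N datapoints and ignores missing-data handling and INSUFFICIENT_DATA.
    """
    window = list(datapoints)[-evaluation_periods:]
    breaching = sum(1 for value in window if value > threshold)
    return "ALARM" if breaching >= datapoints_to_alarm else "OK"


# Hypothetical RDS CPUUtilization samples (percent), one per evaluation period.
cpu = [55.0, 82.0, 91.0, 60.0, 88.0]
print(alarm_state(cpu, threshold=80.0, evaluation_periods=5, datapoints_to_alarm=3))  # ALARM
```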

- Security Vulnerabilities:

We can significantly mitigate security vulnerabilities by combining several measures:

- AWS WAF, AWS Secrets Manager, AWS KMS, and the principle of least privilege.
- Terraform-managed IAM roles.
- A secure deployment strategy: a private Kubernetes cluster with VPN access for private applications, and NLB/ALB exposure for public applications.
- Container image scanning with ECR and Snyk.

- AWS WAF acts as a web application firewall. It protects publicly accessible applications from common web exploits such as SQL injection and cross-site scripting, enforces IP-based access restrictions, and helps mitigate DDoS attacks.

- Privately accessible applications can be reached only over a private OpenVPN connection, and each user has an individual account with MFA enabled.

- AWS Secrets Manager securely stores and retrieves sensitive data in encrypted form so that none of it is hardcoded in application code. This includes database passwords and credentials, JWT tokens, and keystore and truststore passwords.

- AWS KMS encrypts sensitive data at rest. For data in transit, DigiCert certificates managed in AWS Certificate Manager (ACM) are used for TLS termination.

- Implementing the principle of least privilege ensures that each user and service has only the necessary permissions to perform their functions, minimizing the impact of potential breaches.

- By utilizing Terraform for infrastructure provisioning and IAM role management, we enforce consistent security policies across all environments.

- Finally, ECR image scanning and Snyk vulnerability assessments identify security flaws in container images so they can be remediated before deployment, strengthening the overall security posture of the application.

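To make the least-privilege principle concrete, the Python sketch below renders an IAM policy document that lets a single service read only its own secret from AWS Secrets Manager. In this setup the equivalent policy would be declared in Terraform. The secret name, region, and account ID here are hypothetical placeholders.

```python
import json

# Hypothetical ARN of the one secret this service may read.
SECRET_ARN = (
    "arn:aws:secretsmanager:eu-west-1:123456789012:secret:"
    "payment-platform/clearing/db-credentials-AbCdEf"
)


def least_privilege_secret_policy(secret_arn: str) -> dict:
    """Build an IAM policy that allows reading exactly one secret and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadOwnDatabaseSecret",
                "Effect": "Allow",
                "Action": ["secretsmanager:GetSecretValue"],
                "Resource": [secret_arn],  # a specific ARN, never "*"
            }
        ],
    }


policy = least_privilege_secret_policy(SECRET_ARN)
print(json.dumps(policy, indent=2))
```

If the secret is encrypted with a customer managed KMS key, the role would also need `kms:Decrypt` on that key.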

- Compliance and Regulatory Challenges: By combining all of the measures above, the newly designed microservices architecture is compliant with PCI DSS and with SOC 1 and SOC 2 Type II.

Applications Overview:

- Access Control Server: This module enables cardholder authentication for card issuers and can be deployed directly within a bank for use by multiple issuers. It is built for compatibility and efficiency and supports 3D Secure 1 (3DS1) and 3D Secure 2 (3DS2). It also helps issuers comply with the EU's revised Payment Services Directive (PSD2). It reduces false declines and cart abandonment and improves the user experience by providing a frictionless flow during payment.

- Acquiring-UI: This module provides a user interface for working with the payment integration platform applications.

- Issuing-UI: This module provides a user interface for issuing cards and customizing them to the user's needs.

- Authentication-Module: This module handles card authorization checks such as cryptographic verification, PIN and CVV verification, and OTP verification.

- Authentication-Reports: This module generates reports from the Authentication-Module data and stores them as transaction records that the bank keeps for compliance.

- BackOffice-Application: This module provides transaction processing and management for the issuing and acquiring parts of the platform. It implements the business logic of card issuing and acquiring.

- Clearing: This module manages the clearing process for cardholder transactions.

- Card-Production: This module handles card personalization, including preparing files for the personalization bureau.

- Fraud Prevention: This module provides fraud prevention functionality that follows rules defined by the World Bank. These include defining payment procedures, defining payment terms, assessing costs, using card payments, and using closed-loop systems.

- Pin-Change-Server: This module provides PIN set and change functionality. To meet security requirements, it is divided into two components.
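The Authentication-Module's OTP verification is not described further. As an illustration only, the Python sketch below shows one widely used scheme, time-based one-time passwords (TOTP, RFC 6238, built on HOTP, RFC 4226), using just the standard library. Whether the module actually uses TOTP, SMS codes, or another mechanism is an assumption. The key used in the tests is the published RFC test key, not a real secret.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HOTP (RFC 4226): HMAC-SHA1 over an 8-byte big-endian counter, then dynamic truncation."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # low 4 bits of the last byte select a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % (10 ** digits)).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """TOTP (RFC 6238): HOTP whose counter is the number of time steps since the Unix epoch."""
    timestamp = time.time() if at is None else at
    return hotp(secret, int(timestamp // step), digits)


def verify_otp(
    secret: bytes, submitted: str, at: Optional[float] = None, step: int = 30, window: int = 1
) -> bool:
    """Check a submitted code, accepting neighbouring time steps to tolerate clock drift."""
    timestamp = time.time() if at is None else at
    for drift in range(-window, window + 1):
        candidate_time = max(0.0, timestamp + drift * step)
        expected = totp(secret, candidate_time, step, len(submitted))
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

A production verifier would also reject a code that was already accepted in the same time step, to prevent replay.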

Challenges Faced by the Client After Deploying the Applications on EKS:

- Data Consistency Challenges:

- Maintaining the integrity of transaction data and the application's encryption keys during deployments

- Ensuring zero transaction loss during updates

- Managing database schema changes without downtime


- Deployment Pipeline Challenges:

- Implementing rolling update deployment strategies for zero downtime

- Managing database migrations during deployments

- Automating security scans in the deployment pipeline

- Ensuring consistent configuration across environments


- Performance Challenges:

- Meeting transaction processing SLAs

- Handling peak load periods

- Managing network latency across availability zones


- Monitoring and Logging Challenges:

- Implementing comprehensive transaction tracking

- Setting up proper alerting thresholds

- Maintaining audit logs for compliance requirements

- Real-time monitoring of transaction success rates


- Setting Up the RabbitMQ Cluster:

- The application requires a RabbitMQ cluster that is highly available, has low latency, and needs minimal management.

- It must be a three-node cluster, and messages must be encrypted both in transit and at rest because they carry payment data.

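The three-node requirement follows from majority-based replication, such as that used by RabbitMQ quorum queues: a replicated queue stays available while a majority of its members is up. The short Python calculation below shows why three nodes is the smallest cluster that survives one node failure, and why a fourth node adds no extra failure tolerance. Whether the platform's queues are quorum queues is an assumption; the arithmetic applies to any majority-based scheme.

```python
def majority(nodes: int) -> int:
    """Smallest number of nodes that forms a majority (quorum)."""
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """Number of nodes that can fail while a majority is still available."""
    return nodes - majority(nodes)


for n in (1, 2, 3, 4, 5):
    print(f"{n} nodes: quorum {majority(n)}, tolerates {tolerated_failures(n)} failure(s)")
```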

To solve the above challenges after deploying the applications on EKS, we used the following AWS services and tools:

- For the data consistency challenges, we used Amazon EFS to store the transactional data and the error, archived, incoming, and outgoing files.

- Why EFS: The application stores transactional data in shared file storage. It also encrypts data with separate keys for each payment network (Mastercard, Visa, etc.), and every application module uses these keys. The storage therefore has to be mountable as a file system by multiple application modules at once, through Persistent Volumes and Persistent Volume Claims. An EBS volume is block storage that is normally attached to a single node at a time. It therefore cannot provide the shared transactional data and encryption keys this application needs.

- Why not Amazon S3: S3 is object storage accessed through a simple API, whereas the application is built to use NFS clients to store files and encryption keys in shared storage. Using S3 would have required changes to the existing application code, which the client did not want.

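The shared-storage requirement above maps to a Kubernetes PersistentVolumeClaim with the `ReadWriteMany` access mode, backed by the Amazon EFS CSI driver (provisioner `efs.csi.aws.com`). The Python sketch below builds such manifests as dictionaries and prints them as JSON. The file system ID, names, and size are hypothetical placeholders; the client's actual manifests are not shown in this document.

```python
import json

# Dynamic provisioning through EFS access points; the fileSystemId is a placeholder.
storage_class = {
    "apiVersion": "storage.k8s.io/v1",
    "kind": "StorageClass",
    "metadata": {"name": "efs-sc"},
    "provisioner": "efs.csi.aws.com",
    "parameters": {
        "provisioningMode": "efs-ap",
        "fileSystemId": "fs-0123456789abcdef0",
        "directoryPerms": "700",
    },
}

shared_claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "payment-shared-files"},  # hypothetical claim name
    "spec": {
        # ReadWriteMany lets pods of several modules mount the same volume at once,
        # which a single-attach block volume such as EBS (ReadWriteOnce) cannot offer.
        "accessModes": ["ReadWriteMany"],
        "storageClassName": "efs-sc",
        # The field is required by Kubernetes, but EFS does not enforce the size.
        "resources": {"requests": {"storage": "5Gi"}},
    },
}

# kubectl accepts JSON as well as YAML for `kubectl apply -f`.
manifest_list = {"apiVersion": "v1", "kind": "List", "items": [storage_class, shared_claim]}
print(json.dumps(manifest_list, indent=2))
```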

- For Deployment pipeline challenges we have used multiple tools that are opensource and used according to the industry standards: –

- For Implementing rolling deployments, we have used automated Pipelines using open-source tools like Jenkins and Argo CD which helps in automatic configuration to the changes based on the code changes and the build tag changes in the application manifest files.

- For managing the Data upgrades, we have created the Init containers that runs in the EKS environments which are called the Payment integration platform-Upgrade-Tools that are responsible for making changes in the databases schema and tables and after the process the init containers are removed from the cluster. For Database we have Amazon RDS for PostgreSQL instances.

- For Automating the security scans, we have automated the pipeline and configured SNYK for both application code and infrastructure code level of vulnerability scanning and to check the outed packages and the also any kind of harmful packages used in the Docker files.

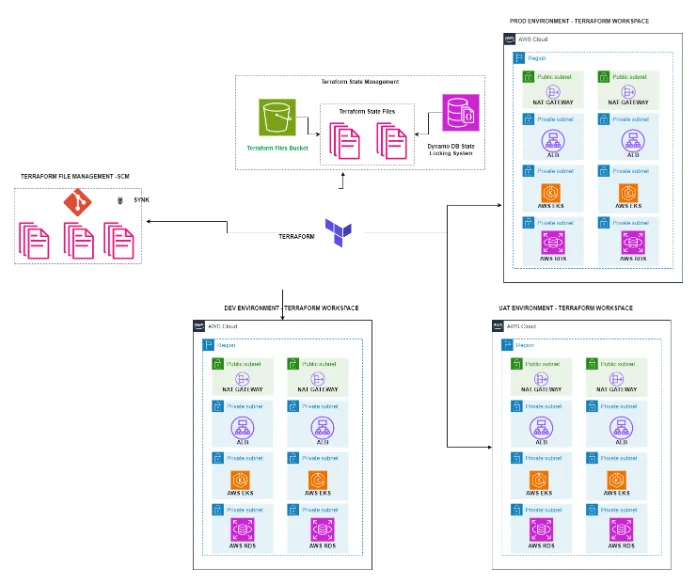

- For Ensuring the consistency across multiple environments we have Terraform workspace method to distinguish between the dev, prod, and Uat Environments.


- For performance, we configured Dynatrace and the Portainer dashboard to monitor metrics such as memory and CPU usage. We also deployed Karpenter, which provisions and scales worker nodes to match the cluster's resource requirements, and the Horizontal Pod Autoscaler (HPA), which scales pods horizontally based on application load and traffic.

- For monitoring and logging, we implemented Dynatrace, which collects detailed logs from the application pods and provides dashboards showing CPU and memory metrics per pod and per node, helping the team detect and prevent bottlenecks.

- For the RabbitMQ cluster, we used Amazon MQ to set up a highly available broker cluster for the application. The Amazon MQ service endpoint resolves to a VPC endpoint, which keeps broker traffic inside the VPC and makes application communication more secure. Data at rest is encrypted with AWS KMS, and Amazon MQ encrypts data in transit with TLS.
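
The sketch below shows client-side connection settings that match this setup: an `amqps://` URL on port 5671 (the standard AMQP-over-TLS port) and a TLS context that verifies the broker's certificate and hostname. The function names are hypothetical, and the host, user, and password are assumed to come from the actual broker configuration and a secrets store.

```python
import ssl
from urllib.parse import quote, urlsplit

AMQPS_PORT = 5671  # standard AMQP-over-TLS port


def broker_url(host: str, user: str, password: str, vhost: str = "/") -> str:
    """Build an amqps:// URL, percent-encoding the credentials and the vhost."""
    return (
        f"amqps://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{AMQPS_PORT}/{quote(vhost, safe='')}"
    )


def is_encrypted(url: str) -> bool:
    """True only for amqps:// URLs, so a plain amqp:// URL can be rejected."""
    return urlsplit(url).scheme == "amqps"


def tls_context() -> ssl.SSLContext:
    """Client TLS context that verifies the broker's certificate and hostname."""
    ctx = ssl.create_default_context()  # CERT_REQUIRED and check_hostname=True
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2  # refuse older protocol versions
    return ctx
```

Rejecting plain `amqp://` URLs up front keeps a misconfigured service from silently connecting without encryption. Client libraries such as pika accept a URL in this form through `pika.URLParameters`.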

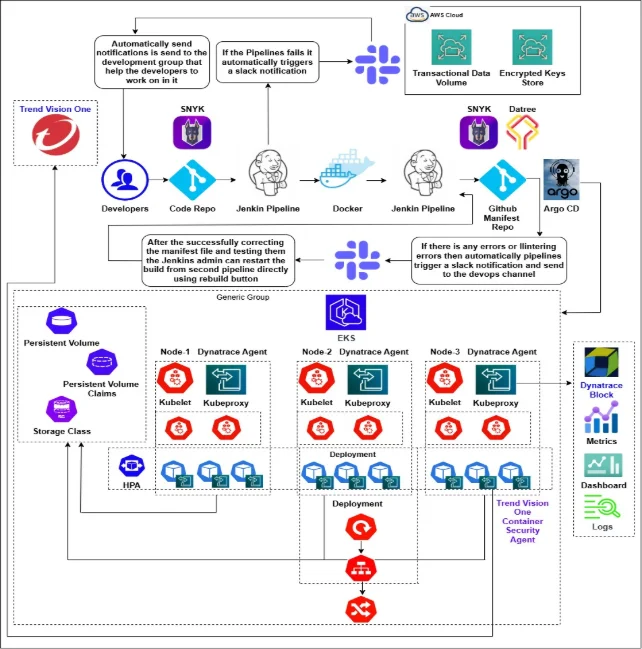
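
The HPA scaling mentioned above follows a documented Kubernetes formula: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up, with no change when that ratio is within a tolerance (0.1 by default) of 1.0. A simplified Python sketch follows; it ignores details the real controller handles, such as missing metrics, pods that are not yet ready, and scale-down stabilization windows, and the `min_replicas`/`max_replicas` defaults are illustrative. When the extra pods cannot be scheduled on existing nodes, Karpenter is what adds node capacity.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Simplified HPA calculation:
    desired = ceil(current_replicas * current_metric / target_metric),
    left unchanged when the ratio is within `tolerance` of 1.0, then clamped.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: no scaling
    else:
        desired = math.ceil(current_replicas * ratio)
    # Respect the configured replica bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four pods averaging 90% CPU against a 60% target give a ratio of 1.5, so `desired_replicas(4, 90, 60)` returns 6.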

Detailed Description of Proposed Solution Pipeline & Architecture:

Because the payment gateway application requires high availability and frequent deployments, the proposed solution architecture below takes all of the above challenges into account: –

- Infrastructure as Code (Terraform): – Keeping the environments of a payment application consistent and maintainable is essential. We therefore used Terraform to manage the AWS services and integrated it with the version control system, which stores the Terraform files. Changes are applied to the existing architecture in the AWS account only after the Terraform files pass a Snyk scan. Version control also enables auditing and rollbacks of infrastructure changes across environments. For state management and locking, we used an Amazon S3 bucket to store the Terraform state files and an Amazon DynamoDB table to lock them.

- Continuous Build Tools (Jenkins): – Jenkins runs on a standalone server as the build tool. It pulls the code from the version control repository and runs a multi-stage pipeline job. The first stage runs Snyk to check the code for vulnerabilities. If any are found, Snyk sends a vulnerability report to the assigned authorities, the pipeline fails, and a notification is sent to the responsible development group through a Slack webhook integration. If the scan passes, the pipeline builds the application images with Docker, scans the images again with Snyk, and pushes them to an Amazon ECR repository, where ECR image scanning checks them once more. If every stage succeeds, the second pipeline is triggered.

- Deployment Environment: –

- Other tools that play an important role in the deployment: –

- Snyk: – Snyk is a powerful tool that helps organizations enhance their security posture by identifying and fixing vulnerabilities across software development and infrastructure management.

- It provides comprehensive vulnerability scanning for application dependencies. It supports a wide range of programming languages and package managers, and it integrates with development workflows to scan code repositories. Snyk continuously monitors the projects and alerts developers when new vulnerabilities are discovered in the dependencies. This proactive approach helps in mitigating risks before they can be exploited.

- Its Infrastructure as Code (IaC) scanning capabilities allow developers to detect misconfigurations and security risks within their IaC scripts, such as Terraform and Kubernetes configuration files. By scanning IaC scripts, Snyk identifies potential security issues like overly permissive access controls, security group misconfigurations, or exposure of sensitive data. This helps ensure that the infrastructure provisioning scripts adhere to security best practices, reducing the risk of deploying vulnerable infrastructure.

- It helps in scanning Docker images for known vulnerabilities in the operating system packages and application dependencies. It integrates with CI/CD pipelines to automatically scan images during the build process and can also scan images stored in Docker registries. Snyk provides detailed reports on identified vulnerabilities, including their paths and advice on how to remediate them. This helps developers and security teams address security issues within container images before they are deployed to production.

- Trend Vision One: – Trend Vision One is a container runtime security tool that can be integrated into the Kubernetes environment with Helm. It checks running containers against the rules configured in the Trend Vision One dashboard, for example preventing any container from running with root privileges. It also scans pods whenever a new Kubernetes deployment manifest is applied in any namespace, monitors container runtimes for security breaches, blocks them, and logs them in the Trend Vision One dashboard. This helps prevent backdoor entry into the containerized environments.

- Checkov: – Checkov is a command-line tool that statically analyzes Terraform code against policies, catching misconfigurations and ensuring that the code follows the expected architecture before it is applied.

- Portainer: – Portainer is container management software for deploying, troubleshooting, and securing applications across clouds. It provides an application portal from which users can restart pods and deployments and manage user access, making the applications easier to operate.

- Kyverno: – Kyverno is an open-source CNCF policy engine for Kubernetes that prevents invalid or non-compliant YAML from being applied to the cluster. We check the manifest files against Kyverno policies before the required files are applied to the cluster.

- Datree: – Datree is a linter for Kubernetes manifest files that checks that they are syntactically correct.

- GitOps: – Argo CD is used to carry out automatic deployments and automate the CI/CD process.
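
The S3 and DynamoDB state locking described in the Terraform bullet above relies on a conditional write: a lock item keyed by `LockID` (the partition key name the S3 backend requires for its DynamoDB table) can be created only if no item with that key already exists. The toy model below illustrates that mechanism in memory; the class and function names and the `Owner` attribute are hypothetical, and Terraform's real lock item carries different metadata.

```python
import threading
import uuid
from typing import Optional


class ConditionalCheckFailed(Exception):
    """Stand-in for DynamoDB rejecting a write whose condition is not met."""


class InMemoryLockTable:
    """Toy in-memory model of a lock table whose partition key is "LockID"."""

    def __init__(self) -> None:
        self._items: dict[str, dict] = {}
        # DynamoDB evaluates a write condition atomically; a mutex emulates that here.
        self._mutex = threading.Lock()

    def put_if_absent(self, item: dict) -> None:
        """Like PutItem with ConditionExpression attribute_not_exists(LockID)."""
        with self._mutex:
            if item["LockID"] in self._items:
                raise ConditionalCheckFailed(item["LockID"])
            self._items[item["LockID"]] = item

    def delete_if_owner(self, lock_id: str, owner: str) -> bool:
        """Release a lock only if `owner` holds it; return whether it was released."""
        with self._mutex:
            if self._items.get(lock_id, {}).get("Owner") == owner:
                del self._items[lock_id]
                return True
            return False


def acquire(table: InMemoryLockTable, lock_id: str) -> Optional[str]:
    """Try to lock one state file; return an owner token, or None if already locked."""
    owner = str(uuid.uuid4())
    try:
        table.put_if_absent({"LockID": lock_id, "Owner": owner})
    except ConditionalCheckFailed:
        return None
    return owner
```

A second run against the same state therefore fails to acquire the lock until the first run releases it, which is what stops two pipeline runs from writing the same state file concurrently.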

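
The fail-fast behaviour of the multi-stage Jenkins pipeline described above can be sketched in Python as follows. The stage names and the job name are hypothetical placeholders, the stage callables stand in for the real Snyk, Docker, and ECR steps, and the Slack message assumes a standard incoming webhook, which accepts a JSON body with a `text` field.

```python
import json
import urllib.request
from typing import Callable, Optional

# A stage is (name, callable returning True on success). The callables are
# placeholders for the real Snyk scan, Docker build, and ECR push steps.
Stage = tuple[str, Callable[[], bool]]


def run_pipeline(stages: list[Stage]) -> Optional[str]:
    """Run stages in order and stop at the first failure.

    Returns the name of the failed stage, or None if every stage passed.
    """
    for name, step in stages:
        if not step():
            return name  # later stages (e.g. the ECR push) never run
    return None


def slack_request(webhook_url: str, job: str, failed_stage: str) -> urllib.request.Request:
    """Build (but do not send) a Slack incoming-webhook POST for a failed build."""
    body = json.dumps({"text": f"{job}: stage '{failed_stage}' failed"}).encode("utf-8")
    return urllib.request.Request(
        webhook_url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )
```

Passing the returned request to `urllib.request.urlopen()` would post the message. In Jenkins itself, the same behaviour is normally expressed in a declarative Jenkinsfile, where a failing stage stops the pipeline and a `post { failure { ... } }` block sends the notification.
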
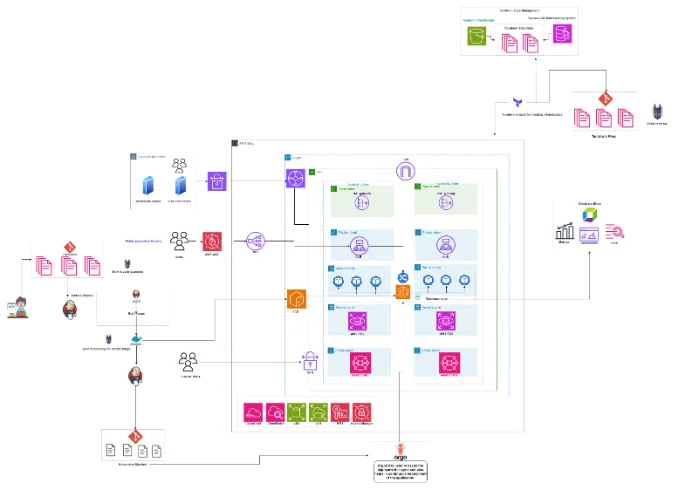
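
As an illustration of the kind of rule Kyverno enforces at admission time (and that Trend Vision One watches for at runtime), the function below checks whether any container in a pod spec could run as root. It is a plain Python sketch of the policy logic, not Kyverno's own policy format, which is declarative YAML. It follows the Kubernetes rule that a container-level `securityContext` overrides the pod-level one; the function name and the container names used with it are hypothetical.

```python
def root_violations(pod_spec: dict) -> list[str]:
    """Return names of containers in a pod spec that could run as root.

    A container passes only if runAsNonRoot is true (container level, else
    pod level), its effective runAsUser is not 0, and it is not privileged.
    """
    pod_ctx = pod_spec.get("securityContext", {})
    violations = []
    # Init containers (such as schema-upgrade jobs) are checked as well.
    for container in pod_spec.get("initContainers", []) + pod_spec.get("containers", []):
        ctx = container.get("securityContext", {})
        # Container-level settings override pod-level ones.
        non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False))
        run_as_user = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        if not non_root or run_as_user == 0 or ctx.get("privileged", False):
            violations.append(container["name"])
    return violations
```

For example, an init container that sets `runAsUser: 0` is reported even when the pod-level context sets `runAsNonRoot: true`.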

Architecture Diagram: –

- Infrastructure Architecture Diagram: –

- Kubernetes Application Deployment Pipeline Architecture Diagram: –

- Terraform Architecture Diagram: –

Outcomes and learnings: –

- Microservices improve scalability, maintainability, and resilience through loose coupling and independent scaling.

- Kubernetes orchestrates containers, enabling scalability, self-healing, and efficient resource management.

- Infrastructure as Code (IaC) automates provisioning, improves consistency, and facilitates collaboration.

- CI/CD pipelines automate builds, tests, and deployments, improving efficiency and reducing time to market.

- Comprehensive monitoring and logging enable proactive issue resolution and root cause analysis.

- Shared storage and managed database services ensure data consistency and improve performance.