Case Study: Agentic GenAI for Contextual Code Assistance and Automated Infrastructure Provisioning

1. About the Client

The client is a B2B SaaS analytics company. Their platform enables 100+ enterprise customers to connect diverse data sources for advanced analytics, warehousing, and interactive reporting. All workloads are hosted in the AWS ap-south-1 (Mumbai) region, serving customers across multiple sectors including retail, manufacturing, and finance.

2. Business Challenges

Despite a robust analytics offering, the client faced persistent technical and operational challenges:

- Performance Bottlenecks in Code Execution: The built-in Jupyter-based notebook environment suffered from slow code execution, making data preparation and debugging cumbersome for end-users.

- Lack of Contextual Code Assistance: Users had no real-time, intelligent support for writing and debugging code in the notebook interface, resulting in steep learning curves and slower adoption.

- Manual Infrastructure Provisioning: Redshift-based data warehouses were provisioned manually, consuming engineering time and introducing configuration errors.

- Inconsistent Resource Optimization: Without workload-aware recommendations, infrastructure was often under- or over-provisioned, driving up costs.

- Limited Voice Interaction Capability: Customers wanted voice-driven query and coding assistance, but existing solutions were either cost-prohibitive or lacked contextual integration.

- LLM Usage Limitations: Initially, the client relied on the OpenAI and Anthropic APIs, but heavy customer usage quickly exhausted API credits, limiting scalability and making costs unpredictable.

3. Solution Overview

Ancrew Global implemented an Agentic GenAI solution leveraging Amazon Bedrock (Claude 3.5 Sonnet, Claude 3.7 Sonnet) to provide contextual, low-latency code assistance and intelligent infrastructure provisioning.

Key innovations included:

- Contextual Code Assistant integrated into the notebook interface using LangChain, LangGraph, and LangSmith for agentic orchestration.

- Dynamic Kernel Provisioning on Amazon EKS with GPU or CPU nodes selected based on workload.

- AI-powered Resource Planner that analyzes dataset size, complexity, and query patterns, then provisions Amazon Redshift stacks via AWS CloudFormation.

- Private & Secure Data Connectivity using AWS PrivateLink, VPC Endpoints, and AWS KMS encryption.

- Cost-Efficient LLM Access via Bedrock, providing pay-as-you-go scalability without exhausting API credits.

4. LLM Analysis

Challenge: Token Usage and Cost Optimization

OpenAI and Anthropic APIs incurred high costs due to token-heavy requests from enterprise customers. With Bedrock, token costs are predictable under a pay-as-you-go model, preventing credit exhaustion.
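Because pay-as-you-go billing scales linearly with tokens, monthly spend can be projected from request volume and average prompt and completion sizes. The Python sketch below is illustrative only: the per-1,000-token prices are placeholders (not published Bedrock rates) and the traffic figures are hypothetical, so substitute current pricing for the chosen model and region.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPricing:
    """On-demand prices in USD per 1,000 tokens (placeholder values, not published rates)."""
    input_per_1k: float
    output_per_1k: float


def request_cost(input_tokens: int, output_tokens: int, pricing: TokenPricing) -> float:
    """Cost of a single request: input and output tokens are billed at separate rates."""
    return (input_tokens / 1000) * pricing.input_per_1k + (output_tokens / 1000) * pricing.output_per_1k


def monthly_cost(requests_per_day: int, avg_input: int, avg_output: int,
                 pricing: TokenPricing, days: int = 30) -> float:
    """Projected spend, assuming a steady daily volume and stable average token counts."""
    return requests_per_day * days * request_cost(avg_input, avg_output, pricing)


# Hypothetical example: 4,000 prompt tokens (notebook context) and 500 completion tokens per request.
pricing = TokenPricing(input_per_1k=0.003, output_per_1k=0.015)
print(round(request_cost(4000, 500, pricing), 4))
print(round(monthly_cost(1000, 4000, 500, pricing), 2))
```

Since the projection is linear, halving the average prompt size (for example by trimming notebook context) roughly halves the input-token share of the bill.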

Challenge: Context Length & Accuracy

Large context payloads were handled inefficiently in the previous API setup. Claude models on Bedrock offered longer context windows and better alignment with retrieval-augmented generation (RAG), reducing token waste.
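Even with long context windows, sending only the most relevant notebook history keeps token usage down. One simple policy, shown here as an assumption rather than the client's actual method, keeps the newest cells that fit a token budget. The character-based token estimate is a rough heuristic, not a real tokenizer.

```python
def estimate_tokens(text: str) -> int:
    """Rough token estimate; assumes ~4 characters per token (heuristic, not a tokenizer)."""
    return max(1, len(text) // 4)


def trim_cell_history(cells: list[str], budget_tokens: int) -> list[str]:
    """Keep the newest notebook cells whose combined estimate fits the budget, oldest first."""
    kept: list[str] = []
    used = 0
    for cell in reversed(cells):  # walk from the most recent cell backwards
        cost = estimate_tokens(cell)
        if used + cost > budget_tokens:
            break  # older cells are dropped once the budget would be exceeded
        kept.append(cell)
        used += cost
    kept.reverse()  # restore chronological order for the prompt
    return kept


history = ["import pandas as pd", "df = pd.read_csv('sales.csv')", "df.groupby('region').sum()"]
print(trim_cell_history(history, budget_tokens=12))
```

A production system would more likely combine recency with retrieval (RAG) so that older but relevant cells can still be included.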

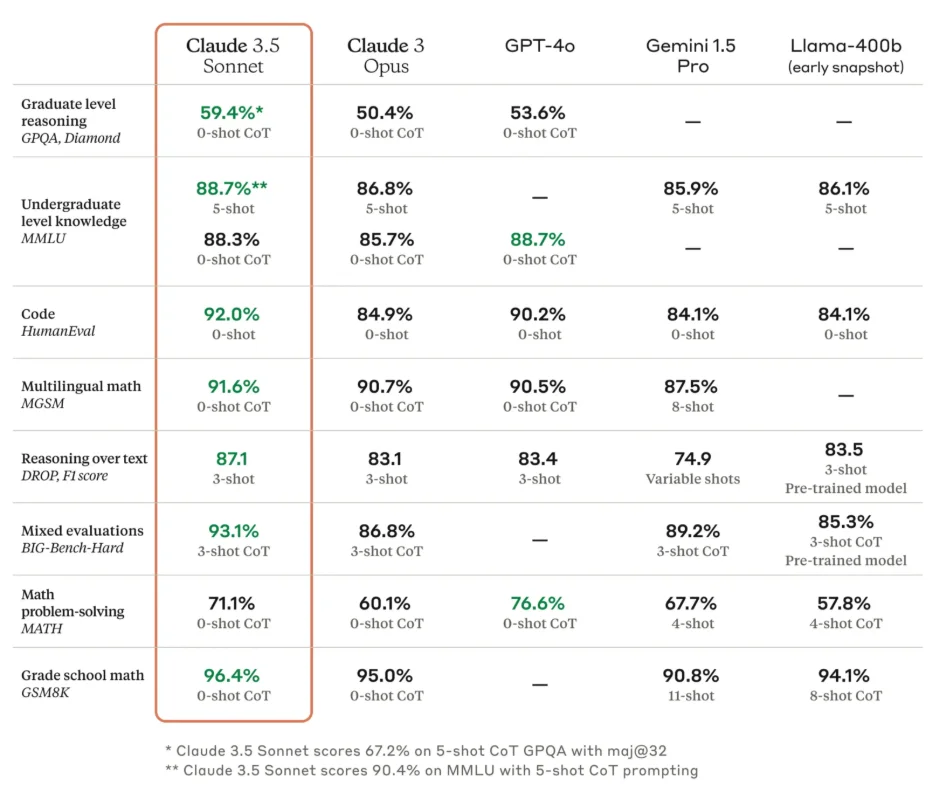

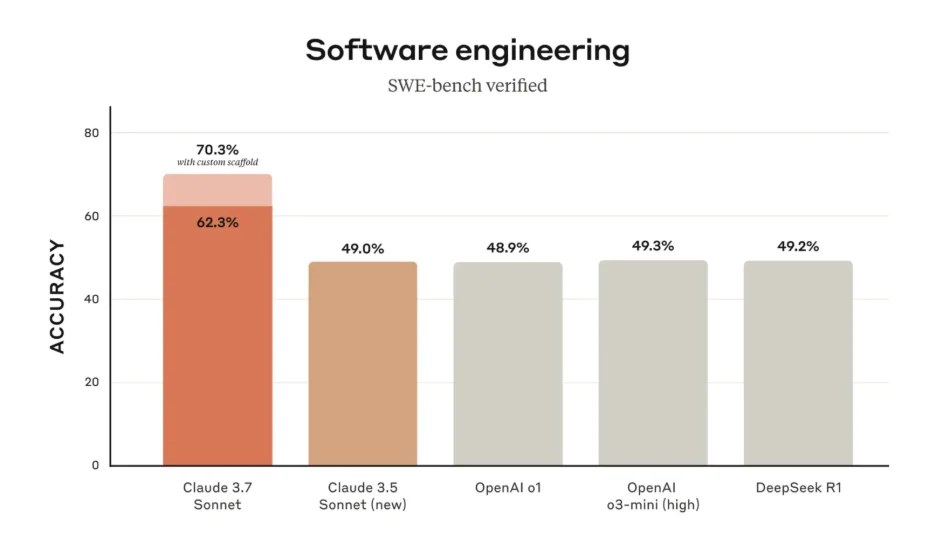

Latest Benchmark Evidence

Independent coding benchmarks showed Claude 3.5 and 3.7 Sonnet outperforming other LLMs on programming and reasoning tasks, making them the strongest choice for contextual code assistance.

5. Key Differentiation from Older LLM Setup

Previous Setup (OpenAI/Anthropic APIs):

- Credits exhausted rapidly under enterprise load.

- Limited context handling for large datasets.

- No deep integration with AWS services.

New Setup (Claude via Bedrock):

- Scalable pay-as-you-go pricing ensures predictable cost management.

- Stronger coding-benchmark performance supports higher accuracy and developer productivity.

- Deeper AWS integration (EKS, Redshift, CloudFormation, VPC) enables fully automated workflows.

- Extended context windows allow the assistant to maintain conversation and notebook continuity.

6. Key Solution Features

A. Agentic Code Assistance

- Claude Sonnet variants for contextual code suggestions and debugging.

- Maintains cell history and output context for improved continuity.

- Interactive prompt-chaining agents powered by LangChain, LangGraph, and LangSmith.
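A minimal sketch of how a notebook request with prior-cell context might be sent to Claude through the Bedrock Runtime Converse API using boto3. The model ID, system prompt, and inference settings are assumptions; depending on regional availability, a cross-region inference profile ID may be required instead. The client is injectable so the prompt-building logic can be tested without AWS credentials.

```python
from typing import Any, Optional

# Assumed model ID; a cross-region inference profile ID may be needed in some regions.
DEFAULT_MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"
SYSTEM_PROMPT = (
    "You are a coding assistant inside a Jupyter notebook. "
    "Use the prior cells and their outputs as context when answering."
)


def build_messages(prior_cells: list[dict[str, str]], question: str) -> list[dict[str, Any]]:
    """Fold prior cell sources and outputs into one user turn in Converse API message format."""
    context = "\n\n".join(
        f"# Cell {i}\n{cell['source']}\n# Output\n{cell.get('output', '')}"
        for i, cell in enumerate(prior_cells, start=1)
    )
    text = f"Notebook context:\n{context}\n\nQuestion:\n{question}"
    return [{"role": "user", "content": [{"text": text}]}]


def ask_assistant(prior_cells: list[dict[str, str]], question: str,
                  client: Optional[Any] = None, model_id: str = DEFAULT_MODEL_ID) -> str:
    """Send the notebook context and question to a Claude model via the Bedrock Converse API."""
    if client is None:
        import boto3  # imported lazily so the prompt logic can run without AWS credentials
        client = boto3.client("bedrock-runtime", region_name="ap-south-1")
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=build_messages(prior_cells, question),
        inferenceConfig={"maxTokens": 1024, "temperature": 0.2},
    )
    # The Converse API returns the assistant reply as a list of content blocks.
    return response["output"]["message"]["content"][0]["text"]
```

Folding cell outputs into the prompt lets the model see error tracebacks from earlier cells, which is what makes debugging suggestions contextual.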

B. Dynamic Notebook Execution Environment

- Amazon EKS clusters with auto-provisioned GPU/CPU kernels based on task complexity.

- Provisioned concurrency mode for low-latency execution.
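The GPU/CPU selection step can be sketched as a function that builds a Kubernetes Pod manifest for a notebook kernel. The size threshold, the `workload-type` node label, and the resource sizes are hypothetical; GPU scheduling via the `nvidia.com/gpu` resource assumes the NVIDIA device plugin is running on the GPU node group.

```python
from dataclasses import dataclass
from typing import Any

# Hypothetical routing threshold and node labels; real values would come from workload profiling.
GPU_DATASET_THRESHOLD_GB = 50.0
NODE_SELECTORS = {"cpu": {"workload-type": "cpu"}, "gpu": {"workload-type": "gpu"}}


@dataclass
class KernelRequest:
    dataset_gb: float
    uses_gpu_library: bool  # hypothetical signal, e.g. the notebook imports a GPU framework


def select_node_pool(req: KernelRequest) -> str:
    """Send GPU-dependent or very large workloads to GPU nodes, everything else to CPU nodes."""
    if req.uses_gpu_library or req.dataset_gb >= GPU_DATASET_THRESHOLD_GB:
        return "gpu"
    return "cpu"


def kernel_pod_manifest(name: str, image: str, req: KernelRequest) -> dict[str, Any]:
    """Build a Kubernetes Pod manifest (as a plain dict) for one notebook kernel."""
    pool = select_node_pool(req)
    limits = {"cpu": "4", "memory": "16Gi"}
    if pool == "gpu":
        # Extended resource exposed by the NVIDIA device plugin on GPU nodes.
        limits["nvidia.com/gpu"] = "1"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": "notebook-kernel", "pool": pool}},
        "spec": {
            "nodeSelector": NODE_SELECTORS[pool],
            "containers": [{
                "name": "kernel",
                "image": image,
                "resources": {"requests": dict(limits), "limits": limits},
            }],
        },
    }
```

A manifest like this could be submitted with the official Kubernetes Python client (`CoreV1Api.create_namespaced_pod`), which accepts a dict body.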

C. AI-Powered Resource Planning & Provisioning

- Custom planner model recommends Redshift configurations based on workload analysis.

- CloudFormation stacks instantiated with variable parameters for storage, node type, and concurrency scaling.
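The planner-to-CloudFormation handoff can be sketched as follows. The sizing rules are hypothetical stand-ins for the custom planner model, and the parameter names (`NodeType`, `NumberOfNodes`, `MaxConcurrencyScalingClusters`) assume a pre-authored template that declares them. Only the boto3 `create_stack` call and its `Parameters` format are standard.

```python
import math
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class WorkloadProfile:
    dataset_tb: float
    peak_concurrent_queries: int


def plan_redshift(profile: WorkloadProfile) -> dict[str, str]:
    """Map a workload profile to stack parameters using hypothetical sizing rules."""
    if profile.dataset_tb < 1:
        node_type, nodes = "ra3.xlplus", 2
    elif profile.dataset_tb < 10:
        node_type, nodes = "ra3.4xlarge", 2
    else:
        # Hypothetical rule: one ra3.16xlarge node per 16 TB, never fewer than two.
        node_type, nodes = "ra3.16xlarge", max(2, math.ceil(profile.dataset_tb / 16))
    # Hypothetical rule: no concurrency scaling up to 15 concurrent queries,
    # then one scaling cluster per 15 queries, capped at 10.
    if profile.peak_concurrent_queries <= 15:
        scaling = 0
    else:
        scaling = min(10, math.ceil(profile.peak_concurrent_queries / 15))
    return {
        "NodeType": node_type,
        "NumberOfNodes": str(nodes),
        "MaxConcurrencyScalingClusters": str(scaling),
    }


def to_cfn_parameters(values: dict[str, str]) -> list[dict[str, str]]:
    """Convert a flat dict into the Parameters list expected by CloudFormation CreateStack."""
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def provision_redshift_stack(stack_name: str, template_url: str, profile: WorkloadProfile,
                             client: Optional[Any] = None) -> str:
    """Create the stack from a pre-authored template and return its StackId."""
    if client is None:
        import boto3  # lazy import so the planner can be tested without AWS access
        client = boto3.client("cloudformation", region_name="ap-south-1")
    response = client.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=to_cfn_parameters(plan_redshift(profile)),
    )
    return response["StackId"]
```

Inside such a template, the scaling value would plausibly feed the `max_concurrency_scaling_clusters` setting of an `AWS::Redshift::ClusterParameterGroup`. Keeping the planner output as plain parameters means the template stays reviewable while the sizing logic evolves independently.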

D. Security & Compliance

- All data encrypted with AWS KMS.

- Private database connections via VPC endpoints and VPN integration.

- IAM roles for fine-grained access control.
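As one example of fine-grained access control, an IAM policy can allow model invocation only for approved foundation models and only through a specific VPC endpoint. This is a sketch under assumptions: the model ID and endpoint ID are hypothetical. If cross-region inference profiles are used, their ARNs would also need to be listed, and it is worth verifying that the `aws:SourceVpce` condition behaves as expected for the Bedrock runtime endpoint in use.

```python
import json


def bedrock_invoke_policy(region: str, model_ids: list[str], vpce_id: str) -> dict:
    """Least-privilege policy: invoke only listed foundation models, only via one VPC endpoint."""
    resources = [f"arn:aws:bedrock:{region}::foundation-model/{m}" for m in model_ids]
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "InvokeApprovedModelsViaVpce",
            "Effect": "Allow",
            "Action": ["bedrock:InvokeModel", "bedrock:InvokeModelWithResponseStream"],
            "Resource": resources,
            # Requests that do not arrive through this endpoint are not allowed by this statement.
            "Condition": {"StringEquals": {"aws:SourceVpce": vpce_id}},
        }],
    }


# Hypothetical model and endpoint IDs.
policy = bedrock_invoke_policy(
    "ap-south-1", ["anthropic.claude-3-5-sonnet-20240620-v1:0"], "vpce-0abc1234example"
)
print(json.dumps(policy, indent=2))
```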

E. Observability & Monitoring

- Amazon CloudWatch metrics for AI usage, provisioning time, and kernel utilization.

- Alerts for provisioning errors and model response anomalies via Amazon SNS.
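The monitoring flow can be sketched with custom CloudWatch metrics and an alarm that notifies an SNS topic. The namespace, metric names, and alarm settings are hypothetical. Note that CloudWatch treats each dimension combination as a separate metric, so the error count is published without dimensions here to let one alarm cover every stack.

```python
from typing import Any, Optional

NAMESPACE = "NotebookAssistant"  # hypothetical custom metric namespace


def provisioning_metrics(stack_name: str, seconds: float, succeeded: bool) -> list[dict[str, Any]]:
    """Build MetricData entries for one provisioning run."""
    return [
        # Per-stack timing, broken out by a StackName dimension.
        {"MetricName": "ProvisioningTime", "Dimensions": [{"Name": "StackName", "Value": stack_name}],
         "Value": seconds, "Unit": "Seconds"},
        # Error count published without dimensions so a single alarm can watch all stacks.
        {"MetricName": "ProvisioningErrors", "Value": 0.0 if succeeded else 1.0, "Unit": "Count"},
    ]


def _cloudwatch(client: Optional[Any]) -> Any:
    if client is not None:
        return client
    import boto3  # lazy import keeps the metric-building logic testable offline
    return boto3.client("cloudwatch", region_name="ap-south-1")


def publish(metric_data: list[dict[str, Any]], client: Optional[Any] = None) -> None:
    _cloudwatch(client).put_metric_data(Namespace=NAMESPACE, MetricData=metric_data)


def create_error_alarm(topic_arn: str, client: Optional[Any] = None) -> None:
    """Notify an SNS topic when at least one provisioning error occurs in a 5-minute window."""
    _cloudwatch(client).put_metric_alarm(
        AlarmName="notebook-provisioning-errors",
        Namespace=NAMESPACE,
        MetricName="ProvisioningErrors",
        Statistic="Sum",
        Period=300,
        EvaluationPeriods=1,
        Threshold=1.0,
        ComparisonOperator="GreaterThanOrEqualToThreshold",
        TreatMissingData="notBreaching",
        AlarmActions=[topic_arn],
    )
```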

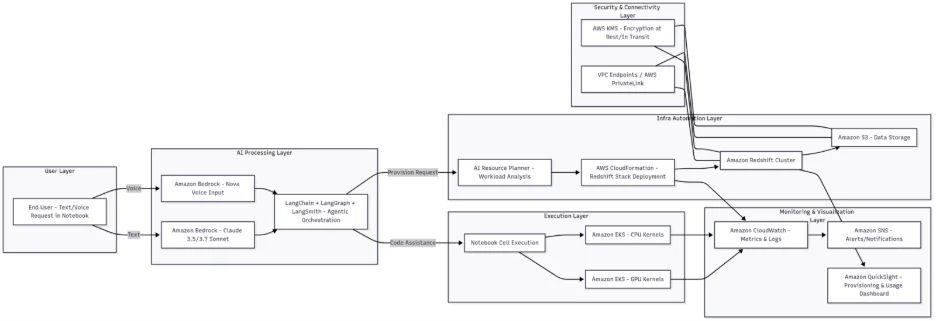

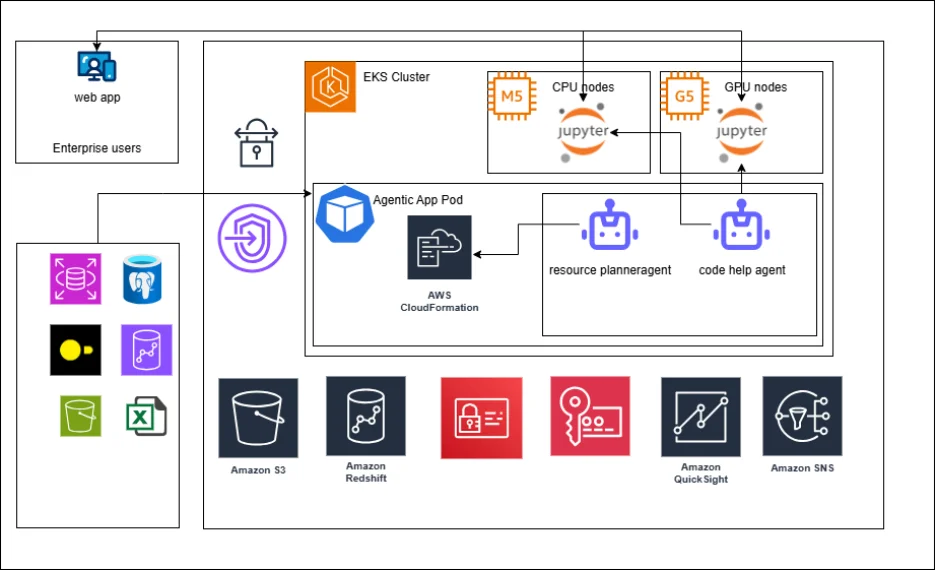

7. Solution Architecture

Architecture Flow:

- User initiates a request in the notebook.

- Claude Sonnet or Amazon Nova on Bedrock processes the request, using context from prior cells.

- LangGraph agents determine whether to provide code/debug suggestions or trigger infrastructure provisioning via CloudFormation.

- EKS kernels are dynamically provisioned for execution.

- CloudFormation templates deploy Redshift stacks with AI-optimized parameters.

- All usage and provisioning metrics are captured in CloudWatch and visualized in QuickSight.
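The branching step in the flow above can be sketched in plain Python. In the described system an LLM agent makes this decision; here a hypothetical keyword check stands in for it, and the two handlers are placeholders for the Bedrock call and the CloudFormation call.

```python
from typing import Callable, Literal, TypedDict

Route = Literal["assist", "provision"]


class AgentState(TypedDict):
    request: str


# Hypothetical keyword list standing in for the LLM's intent classification.
PROVISION_KEYWORDS = ("provision", "create a cluster", "create cluster", "deploy", "scale up")


def route(state: AgentState) -> Route:
    """Decide the next step: infrastructure provisioning or code/debug assistance."""
    text = state["request"].lower()
    return "provision" if any(k in text for k in PROVISION_KEYWORDS) else "assist"


def assist(state: AgentState) -> str:
    return f"code-assist: {state['request']}"  # placeholder for the Bedrock call


def provision(state: AgentState) -> str:
    return f"provision-stack: {state['request']}"  # placeholder for the CloudFormation call


HANDLERS: dict[str, Callable[[AgentState], str]] = {"assist": assist, "provision": provision}


def handle(state: AgentState) -> str:
    """Dispatch the request to the handler chosen by the router."""
    return HANDLERS[route(state)](state)
```

In LangGraph, a function with the same shape as `route` can be registered through `StateGraph.add_conditional_edges` so the graph branches to an assistance node or a provisioning node.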

8. AWS Services Used

- AI & LLM: Amazon Bedrock (Claude Sonnet, Nova)

- Orchestration: LangChain, LangGraph, LangSmith, AWS Lambda

- Compute: Amazon EKS (GPU & CPU)

- Data & Analytics: Amazon Redshift, Amazon S3

- IaC: AWS CloudFormation

- Security: AWS KMS, IAM, VPC Endpoints, PrivateLink

- Monitoring & Alerts: Amazon CloudWatch, SNS

- Visualization: Amazon QuickSight

9. Success Metrics

- 50% Reduction in Code-to-Execution Time – Faster notebook cell execution with contextual assistance.

- 70% Faster Infrastructure Provisioning – Redshift deployment reduced from hours to minutes.

- 25% Increase in Engineer Productivity – Less debugging, more analysis.

- 93% Accuracy in Infrastructure Recommendations – Matching optimal performance benchmarks.

- Cost Predictability Achieved – Eliminated API credit overruns with Bedrock pay-as-you-go model.

- Improved Developer Experience – Verified by coding benchmarks where Claude led industry LLMs in reasoning and programming accuracy.